Reinhard Wilhelm on some aspects of Informatics close to his heart#

My original area of work was Compilation, the translation of programming languages, from problem-oriented into machine-oriented languages. The “optimization” phase in this translation attempts to improve the efficiency of the program to be translated by transforming it into a more efficient, semantically equivalent program. The preservation of the semantics of the program is important because otherwise the machine program would do something different from what the programmer intended and wrote. However, most of the transformations would change the semantics if not judiciously applied. Certain conditions must be satisfied for the program to make the transformations safely applicable. To check them, an analysis of larger parts of the program may be necessary. Such an analysis is called a Static Analysis since it is applied to the program text before execution, that is, without executing the program on input. A beautiful theory for static analysis, Abstract Interpretation, was developed by our fellow academician Patrick Cousot and and his wife, Radhia, in the late Seventies. This theory provided understanding what the compiler writers had been doing so far when they implemented compilers, but it transcended this area in several directions.Most importantly, it was discovered that the same analysis techniques could be used to verify Safety Properties of programs. Safety properties state that no execution of the program will ever produce a certain undesirable behaviour, for instance divide by zero, produce an overflow of the range of representable numerical values, or exceed the space allocated to it for execution. Such behaviours may cause the program to crash with undesirable consequences for the program’s environment, of which a frustrated user may be a harmless instance and catastrophic and lethal accidents instances to be avoided under all circumstances.

So, static analysis turned from a compilation technique into a verification technique. While compilation is somehow taken as a bread-and-butter technique of interest only to the programmer, verification of computer systems has become a publicly recognized necessity as more and more systems in our daily life depend on the correct functioning of computer systems. No passenger airplane would safely and efficiently fly without computers controlling more or less all the involved subsystems such as engines, navigation, stabilization, communication, and entertainment of the passengers. The same holds for cars, household appliances, multi-media applications, digital cameras, medical appliances, and computer games. The computer systems working in these applications around us are called embedded systems. The user, in general, doesn’t see them. He may only indirectly guess that a computer controls the system he is actually using.

One particular area of my work is in this area, the verification of required properties of embedded systems. I will go into more detail about one of these properties. Many of these embedded systems have to satisfy time constraints, that is, they should not only produce correct reactions to their input, but they should produce the reactions within given time bounds. These time bounds are dictated by the physics of the system they control. The computer controlling the airbags in a car, for instance, has to select and trigger the right airbags within 10mSec in case of a collision. Otherwise, the driver and his front passenger would hit the steering wheel or the dashboard before the airbag is filled.

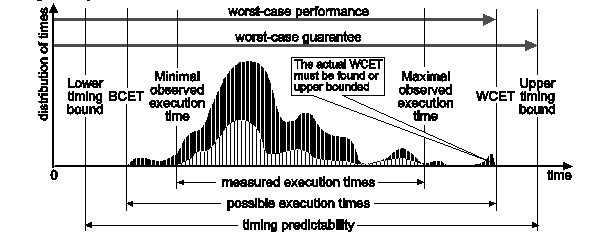

So, why is it a problem to show that the software running on an embedded computer will always produce a reaction within the given time limit, a problem that when one solves it will elevate one into a serious institution such as the Academia Europaea? Well, this is the result of badly oriented research and development in one branch of Informatics, namely computer architecture. According to rather safe estimations, 98% of all deployed processor chips work in embedded systems and only 2% in PCs, workstations, servers, and high-performance computers. Check this for yourself. You may drive around 50 processors in your car, employ one each in your CD-, your DVD-players, a few in your television set, one in your washing machine, and, alas!, you may own a laptop. However, our colleagues in computer architecture have directed their research efforts in accordance with the industry towards the PC you have on your desk rather than the computer you drive around in your car. This has led to modern high-performance processors executing your enormous work load, caused by reading email or editing WORD files, with amazing speed. The downside of it is that this speed is the result of architectural inventions that make the task of verifying the satisfaction of timing constraints a veritable challenge. This is due to the high variance in execution times. The time it takes to execute a single machine instruction, the elementary commands of the computer, can vary by a factor of 100 or more. The architects have aimed at and succeeded in improving the average-case performance.

That’s where static analysis comes into play! Static analysis can efficiently analyse all executions of the program, i.e., independently of the input and the initial execution state. It can be efficient because it is cleverly using approximation in a sound way, that is, it uses approximation only on the safe side; all statements derived about the program’s behaviour must hold for all executions, but the analysis may also say, “I don’t know”, a perfectly reliable statement.

The aeronautics industry has very strict certification rules. Any passenger airplane has to first pass a high-effort certification process. The European Airworthiness Authorities accepted our tool for the certification of several time-critical subsystems of the Airbus A380. Thereby, the tool acquired the status of “validated tool” for these avionics applications. This amounts to the accolade for any tool. Our tool is the only one world-wide to have acquired this status.

The methods we have developed, as also many of our fellow academicians like Patrick Cousot, Josef Sifakis, Manfred Broy, Tony Hoare, Gérard Berry, Jean-Raymond Abrial, to name a few, are called Formal Methods, for many American colleagues a typical area of Euroscience, somewhat of a synonym for unprofitable l’art pour l’art. On the other side, it may be responsible for the dominance of European research and development in Embedded Systems.

Back to the Informatics Section